✒️ Foreword

Governance is scaling—or at least, it has to.

The old playbook was built for quarterly risk reports, internal audits, and static registers. But we’re not governing spreadsheets anymore. We’re governing systems that retrain themselves. AI that reasons. Models that adapt to users, environments, and incentives in real time.

That’s why this edition focuses on movement:

- The shift from manual to machine-assisted GRC

- From ethics as compliance to ethics as strategy

- From principles on paper to frameworks with teeth

Every spotlight review below speaks to a friction point—and what it looks like when governance tools finally catch up with the systems they’re meant to steer.

📚 This issue's spotlight reviews:

- 📘 The ROI of AI Ethics, Marisa Zalabak et al. | The Digital Economist → Ethics reframed as value generation: how trust, retention, and brand integrity can become part of ROI discussions.

- 📘 AI RMF 1.0 Controls Checklist, GRC Library (AI-generated) → The NIST AI RMF translated into checklist prompts: useful, but not production-ready. Treat it as scaffolding, not gospel.

- 📘 Law & Compliance in AI Security & Data Protection → Legal deep-dive into GDPR, NIS2, and AI Act intersections—strong on convergence, auditability, and joint compliance.

- 📘 The AIGN Framework 1.0, Artificial Intelligence Governance Network → 60-page operational architecture bridging principle and execution, with agentic risk tools and diagnostic templates.

- 📘 Ahead of the Curve: Governing AI Agents Under the EU AI Act, Amin Oueslati & Robin Staes-Polet | The Future Society → The definitive guide to mapping AI agents onto EU AI Act structures, from GPAI risk to downstream deployer duties.

💡 Food for thought: If a ransomware gang can cite securities law to pressure victims, and a kindergarten with no walls can model transparent oversight—then maybe our frameworks could use a rethink too. Governance doesn’t always need more layers. Sometimes it needs better flow.

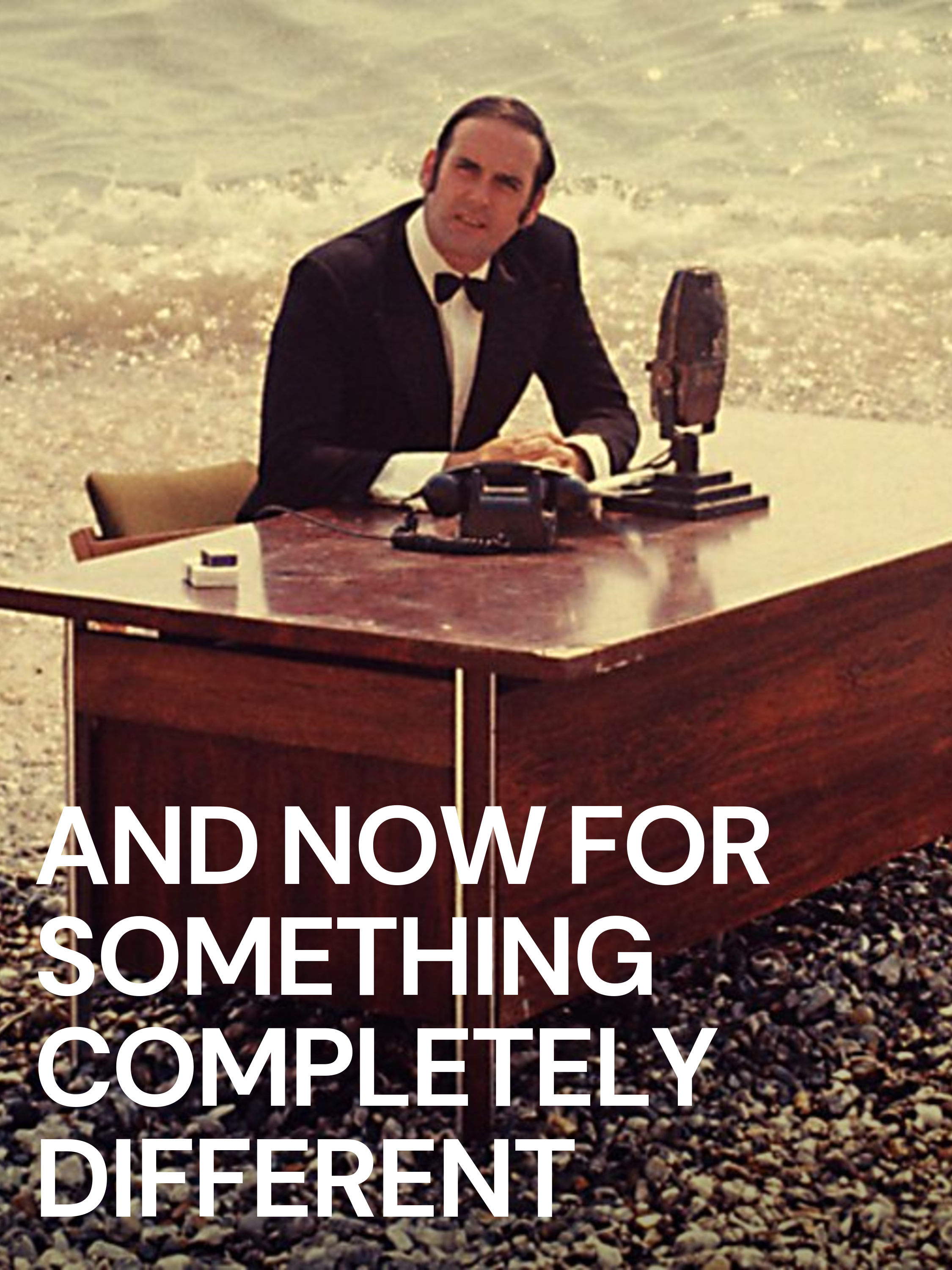

See you in the next issue—

And as always, thank you for reading.

—Jakub

Curator, AIGL 📚

🍽️ Food for Thought - Opportunities of AI-Assisted GRC Tools

Most governance teams are stuck in a paradox: the faster risk evolves, the slower our processes feel. Manual audits. Siloed registers. Excel-based risk maps updated quarterly—if at all. Meanwhile, the systems we’re supposed to govern are learning in real time.

That gap is where AI-assisted GRC tools step in—not as magic solutions, but as pragmatic accelerators. Governance, Risk, and Compliance (GRC) platforms powered by AI can spot policy violations faster, auto-tag sensitive data, flag regulatory mismatches, and even forecast emerging risks before they hit the radar. We’re not talking about replacing human judgment—we’re talking about scaling it.

The GRC Library has started mapping this space with its AI & GRC Tools Directory. It’s an evolving list of platforms applying machine learning, NLP, and automation to risk classification, third-party assessments, and regulatory change tracking. Think of it as a compass for compliance officers and governance teams looking to upgrade from checklists to adaptive oversight.

What’s the opportunity here?

- From static to dynamic risk management: AI helps track changing exposure in real time.

- From reactive to predictive compliance: Tools can pre-empt issues using regulatory horizon scanning.

- From busywork to strategic focus: Automating low-value tasks frees up time for deeper governance design.

💡 Try this: Audit your GRC stack. Which tasks are still being done manually, and which could be assisted, or at least flagged, by machine learning? If AI can monitor your suppliers better than your inbox can, it’s time to reallocate your attention.

What’s the risk of not upgrading governance—while everything else gets smarter?

☀️ Spotlight Resources

📘 The ROI of AI Ethics

Profiting with Principles for the Future, by Marisa Zalabak et al. | The Digital Economist | 2025

🔍 Quick Summary

A bold attempt to reframe AI ethics as a value-generating activity, not a compliance cost. This 35-page position paper offers a structured overview of how ethical AI can yield quantifiable returns—financial, reputational, and strategic—and introduces an expanded ROI framework that integrates ethical dimensions into traditional business analysis.

📘 What’s Covered

The paper advances the concept of Ethical AI ROI, distinguishing it from traditional ROI, GRC ROI, and basic AI ROI by incorporating qualitative and societal impacts. Key contributions include:

- Four ROI models compared: Traditional ROI, AI ROI, GRC ROI, and Ethical AI ROI, with clear formulas, objectives, and value components (p. 10)

- Mastercard case study on operationalizing governance through platforms like Credo AI (p. 6)

- Holistic ROI framework (HROE) capturing direct, intangible, and strategic impacts (p. 15)

- Key value levers: trust, brand integrity, workforce satisfaction, and regulatory alignment

- Side-by-side tables comparing risk, value, and time horizons across ROI types (pp. 10–12, 26–28)

- Real-world cautionary tales: IBM Watson for Oncology, Boeing 737 MAX, Klarna job cuts (pp. 20–22)

It also proposes an “Ethics Return Engine” (ERE)—a calculator model still under development—that would assign scores to risk mitigation, brand lift, and trust outcomes for decision support (p. 24).

💡 Why It Matters

This paper makes a strong argument for moving ethics upstream in AI development—framing it not only as a values-driven decision, but as a strategic hedge against reputational, operational, and legal risks. It recognizes that while compliance may be the floor, stakeholder trust is the ceiling.

By quantifying intangibles (like employee retention and customer loyalty), it pushes ethics into boardroom language. This could help ethics teams make a business case, not just a moral one.

It also ties nicely into global frameworks, referencing ISO/IEC 42001, the EU AI Act, and the UNESCO Recommendation on the Ethics of Artificial Intelligence, which makes it a useful bridge between policy and enterprise risk planning.

🧩 What’s Missing

- The Ethics Return Engine is still conceptual—no tool, dataset, or working model yet.

- Metrics are described broadly, but no benchmark values or case-specific ROI math are shared.

- Intended for executives, but it lacks operational guidance on embedding ethics into workflows.

- Some examples (e.g., Microsoft’s AI fossil fuel partnerships) feel more polemic than analytical.

- May overstate readiness of most orgs to adopt multi-dimensional ROI methods.

This paper is a great conversation starter for ethics, compliance, and strategy teams looking to push AI governance into value discussions. Just don’t treat it as a toolkit—yet.

📘 AI RMF 1.0 Controls Checklist

GRC Library (June 2025)

⚠️ This resource was generated with AI. Use with caution.

🔍 Quick Summary

This document attempts to operationalize the NIST AI Risk Management Framework (AI RMF 1.0) into a control-level checklist for practitioners. It follows the four AI RMF Core functions (Govern, Map, Measure, Manage) and expands them into detailed control questions meant to support internal audits or implementation planning.

⚠️ However, the file was produced with the assistance of a generative AI tool named Helena, and explicitly notes it may contain errors. It is not an official NIST document, nor is it endorsed by any U.S. federal agency.

📘 What’s Covered

The checklist maps each NIST AI RMF function to a set of control-level prompts, structured as questions such as:

- “Have you documented the purpose and context of the AI system?”

- “Are known measurement gaps tracked and communicated?”

- “Is there a process to validate robustness under real-world conditions?”

- “Have roles for AI risk management been assigned and communicated across the lifecycle?”

Each control item is designed to support traceability and documentation for audits, procurement reviews, or system assurance discussions. The language generally mirrors NIST’s taxonomy and tone, and references relevant sections of the AI RMF Core Document.
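One way to act on the traceability point is to keep each checklist prompt as a structured record with an owner, links to evidence, and a manual-verification flag, then report what is still open per function. The following is a minimal Python sketch under our own assumptions: the `ControlItem` fields, the `open_items_by_function` helper, and the function each sample question is filed under are hypothetical choices for illustration, not part of the checklist or of NIST's materials.

```python
from dataclasses import dataclass, field

# The four AI RMF Core functions.
FUNCTIONS = ("Govern", "Map", "Measure", "Manage")


@dataclass
class ControlItem:
    function: str                 # which AI RMF function the prompt is filed under (our assumption per item)
    question: str                 # control-level prompt, e.g. copied from the checklist
    owner: str = ""               # hypothetical field: who is accountable for answering it
    evidence: list[str] = field(default_factory=list)  # hypothetical field: links to supporting documents
    verified: bool = False        # set only after human review, since the source text is AI-generated

    def __post_init__(self) -> None:
        # Reject typos such as "Goverm" so items are never silently dropped from reports.
        if self.function not in FUNCTIONS:
            raise ValueError(f"Unknown AI RMF function: {self.function}")


def open_items_by_function(items: list[ControlItem]) -> dict[str, int]:
    """Count items per function that still lack evidence or manual verification."""
    counts = {f: 0 for f in FUNCTIONS}
    for item in items:
        if not item.evidence or not item.verified:
            counts[item.function] += 1
    return counts


checklist = [
    ControlItem("Map", "Have you documented the purpose and context of the AI system?",
                owner="product", evidence=["system-card.md"], verified=True),
    ControlItem("Measure", "Are known measurement gaps tracked and communicated?"),
    ControlItem("Measure", "Is there a process to validate robustness under real-world conditions?"),
    ControlItem("Govern", "Have roles for AI risk management been assigned and communicated across the lifecycle?",
                owner="risk-office"),
]

print(open_items_by_function(checklist))
# {'Govern': 1, 'Map': 0, 'Measure': 2, 'Manage': 0}
```

Keeping `verified` separate from `evidence` mirrors the advice to treat AI-generated prompts as unreviewed draft material: an item with attached documents still counts as open until someone has checked it.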

💡 Why It Matters

As the NIST AI RMF gains traction—particularly among U.S. federal contractors and global orgs seeking non-EU-aligned risk tools—checklists like this are a natural next step. The original RMF was never designed to be plug-and-play. This resource tries to fill that gap by translating abstract risk goals into actionable controls.

If your team is looking for a first-pass operationalization of the NIST framework, this checklist can serve as a discussion starter or template seed. Just don’t mistake it for policy-grade material.

🧩 What’s Missing

- ❗ AI-generated: The document openly states that it was created using AI assistance and may contain incorrect or outdated content. Manual verification is required.

- 📉 No supporting evidence or source citations—many control questions are unlinked to specific standards or real-world mappings.

- 🔧 Lacks customization guidance—no prioritization for industry, risk level, or system function.

- 📝 No accompanying glossary, version history, or integration guide for ISO/IEC 42001 or other governance frameworks.

- 📄 Contains repeated legal disclaimers warning against formal reliance:

“This content includes AI-assisted information. It may contain errors or incorrect information. Please verify all information carefully before use.” (p. 4)

If you use this resource, treat it like an unreviewed draft—not a standard. It’s a spark for building internal checklists, not a substitute for expert review or official documentation.

📘 Law & Compliance in AI Security & Data Protection

🔍 Quick Summary

This is a 60+ page legal primer aimed at practitioners navigating the complex interplay between AI, cybersecurity, and data protection law, particularly under the GDPR, NIS2, and the EU AI Act, whose obligations are now phasing in. It’s not just an overview; it’s an argument for embedding legal risk thinking into every phase of AI system development and deployment. A solid, text-heavy resource for legal, compliance, and security teams working together.

📘 What’s Covered

The report walks through:

- AI system security obligations under GDPR, focusing on Article 32 (security of processing), DPIAs, and joint controllership concerns.

- NIS2 and cybersecurity mandates that could apply to AI providers, especially those handling essential services or digital infrastructure.

- The AI Act’s approach to risk, including Articles 9–15 on risk management, data governance, documentation and record-keeping, transparency, human oversight, and accuracy, robustness, and cybersecurity.

- Cross-law mapping, showing how to reconcile obligations that overlap between the three regimes.

- Governance recommendations, including auditability, breach notification workflows, and the use of pseudonymization/encryption.

It emphasizes the “security by design” principle and makes a strong case for aligning privacy and security strategies early in AI system development. While most sections read like a legal memo, several include actionable checklists and implementation tips.

💡 Why It Matters

Most governance discussions isolate privacy, security, and AI compliance as separate silos. This document refuses to do that—and that’s its strength. It shows how weak security can undermine even the most “ethically aligned” AI system, and how compliance isn’t just about avoiding fines but maintaining trust and operational resilience.

Especially valuable for organizations facing compliance overlap: high-risk AI under the AI Act, plus NIS2 obligations, plus GDPR duties. It helps identify points of convergence, redundancy, and conflict—exactly the kind of groundwork governance teams need when designing integrated controls.

🧩 What’s Missing

- The report is very EU-centric; no significant treatment of U.S. or global approaches to AI security law.

- Not beginner-friendly—assumes strong familiarity with legal terminology and baseline cybersecurity standards.

- Could benefit from visuals: a lifecycle map of obligations, risk flowcharts, or compliance matrices would go a long way.

- Little focus on emerging technical standards (e.g. ISO/IEC 42001) or AI-specific red teaming/incident reporting practices.

🌙 After Hours

Two stories this week that made me laugh, then think — then double-check that they were real.

🔏 That one time a ransomware gang filed a complaint with the SEC against their own victim

A ransomware gang filed a complaint with the SEC… against their own victim — claiming the company didn’t disclose the breach fast enough. That’s right: cybercriminals are now citing U.S. securities law to apply pressure. You can’t make this up (but they did).

It’s a wild one, but not just a joke. If weaponized, this could pressure public companies to pay ransoms faster — or face regulatory fallout. Welcome to the next layer of “AI + risk + incentives” bingo.

Now imagine the next step: automated SEC-form-generating bots, built on ChatGPT-style models and fine-tuned on threat-actor playbooks. Coming soon to a Boardroom Panic Report near you.

🎠 The Kindergarten With No Walls

In Tokyo, Fuji Kindergarten was designed as a giant oval with no walls and a rooftop running track. Kids run an average of 4 km a day, and no one ever tells them to be quiet. Tezuka Architects basically built a Montessori daydream.

Kids flow between indoors and outdoors without boundaries — and somehow it works. It’s like safety-by-design, but with treehouses.

If your governance framework needs a metaphor, maybe make it more like this building. Transparent, circular, and built to keep people moving, not boxed in.